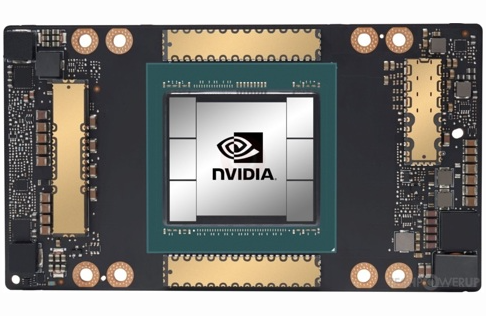

The NVIDIA A100 Tensor Core GPU is at the forefront of the new era of high-performance computing and artificial intelligence. For AI-driven businesses, data centers, and research institutes, this state-of-the-art processor—built with Ampere architecture—offers unparalleled speed, efficiency, and scalability.

The NVIDIA A100 marks a major step forward in AI hardware: NVIDIA cites up to a 20x performance improvement over the previous generation on select workloads. Whether it’s enabling real-time data analytics or training intricate deep-learning models, this chip is changing how companies, researchers, and engineers push the envelope of what’s feasible.

NVIDIA A100 Key Specifications

| Feature | Details |
|---|---|
| Architecture | NVIDIA Ampere |
| Memory | Up to 80GB HBM2e |
| Memory Bandwidth | About 2 TB/s (80GB model) |
| Performance Boost | Up to 20x over the previous generation (peak figure for select workloads) |
| Multi-Instance GPU (MIG) | Can be partitioned into up to seven independent GPU instances |
| Primary Use Cases | AI, Deep Learning, Data Analytics, High-Performance Computing (HPC) |
| Tensor Core Technology | Supports mixed-precision computing for efficiency |
| Cloud Integration | Works with AWS, Google Cloud, Microsoft Azure |
| Scalability | Efficient from single instances to massive multi-GPU clusters |

🔗 Learn more at: NVIDIA Official Site

Transforming AI Tasks with Unparalleled Efficiency

The complexity of AI models is increasing rapidly, demanding faster training cycles, bigger datasets, and more processing power. The NVIDIA A100 addresses these demands with multi-precision computing, which lets a workload trade numerical precision for speed where full precision is not needed.
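
To make the precision trade-off concrete, here is a minimal CPU-only sketch in plain Python. It is not A100 or CUDA code: it only emulates rounding to half precision (FP16) and single precision (FP32) with the standard `struct` module. The helper names `to_fp16`, `to_fp32`, and `accumulate` are illustrative assumptions. The sketch shows why mixed-precision schemes typically keep low-precision inputs but accumulate in a wider format.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def accumulate(values, round_fn):
    """Sum values, rounding the running total after every addition."""
    total = 0.0
    for v in values:
        total = round_fn(total + v)
    return total


# 10,000 small FP16 inputs whose exact sum is close to 1.0.
inputs = [to_fp16(1e-4)] * 10_000

fp16_sum = accumulate(inputs, to_fp16)   # FP16 inputs, FP16 accumulator
mixed_sum = accumulate(inputs, to_fp32)  # FP16 inputs, FP32 accumulator

print(f"FP16 accumulator: {fp16_sum:.4f}")
print(f"FP32 accumulator: {mixed_sum:.4f}")
```

With a half-precision accumulator the running total stalls once each new term falls below the rounding step, while the single-precision accumulator stays close to the true sum. Keeping inputs small and accumulators wide is the basic idea behind mixed-precision training.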

With Multi-Instance GPU (MIG) technology, a single A100 can be divided into up to seven isolated GPU instances, each with its own share of compute and memory. This flexibility lets businesses run multiple AI models on one card at once, improving utilization and cutting costs.
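
The partitioning described above can be sketched as a simple capacity check. The profile names and slice counts below follow NVIDIA's published MIG profiles for the 80GB A100 (seven compute slices, eight memory slices). The function `fits_on_one_a100` is a hypothetical helper, and the check is deliberately simplified: it only adds up slices and ignores the placement rules that real MIG configurations must also satisfy.

```python
# Slice costs for A100 80GB MIG profiles: (compute slices, memory slices).
MIG_PROFILES = {
    "1g.10gb": (1, 1),
    "2g.20gb": (2, 2),
    "3g.40gb": (3, 4),
    "4g.40gb": (4, 4),
    "7g.80gb": (7, 8),
}
TOTAL_COMPUTE_SLICES = 7
TOTAL_MEMORY_SLICES = 8


def fits_on_one_a100(requested):
    """Return True if the requested profiles fit within one GPU's slices.

    Simplified assumption: only total slice counts are checked, not the
    hardware placement constraints that apply on a real device.
    """
    compute = sum(MIG_PROFILES[name][0] for name in requested)
    memory = sum(MIG_PROFILES[name][1] for name in requested)
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_SLICES


print(fits_on_one_a100(["1g.10gb"] * 7))          # seven small instances
print(fits_on_one_a100(["3g.40gb", "4g.40gb"]))   # two larger instances
print(fits_on_one_a100(["4g.40gb", "4g.40gb"]))   # needs 8 compute slices
```

A planner like this is only useful for rough sizing. On real hardware, instances are created and validated through NVIDIA's own tooling, such as the `nvidia-smi mig` subcommands.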

AI researchers benefit from shorter model training times, faster data processing, and dynamic workload scaling, a crucial advantage in machine learning, autonomous robotics, and natural language processing.

Cloud and AI’s Future: Why the A100 Is Crucial

More than just a GPU, the NVIDIA A100 anchors a broader AI platform designed to work with cloud computing systems. Whether it is used on-site or via hyperscalers such as Microsoft Azure, AWS, and Google Cloud, the A100 offers high computational efficiency.

Businesses can use cloud-native optimization and large-scale AI and machine learning model deployment with NVIDIA AI Enterprise. This makes AI more affordable and accessible by guaranteeing that companies can scale up or down in response to demand.

The A100’s compatibility with VMware vSphere and NVIDIA-Certified Systems guarantees a seamless transition as businesses adopt hybrid cloud solutions, making it a great investment for businesses hoping to stay ahead in the AI-driven future.

How High-Performance Computing is Being Revolutionized by the A100

The NVIDIA A100 is advancing scientific research beyond AI. This chip’s 2 TB/s memory bandwidth allows it to process large datasets at breakneck speed, making it a vital tool for quantum physics simulations, biomedical research, and climate modeling.
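
As a rough back-of-the-envelope illustration of what 2 TB/s means, the sketch below computes the minimum time needed to stream a dataset through GPU memory once. It assumes the peak figure is fully sustained, which real workloads rarely achieve, and the function name `min_stream_time_seconds` is hypothetical.

```python
def min_stream_time_seconds(data_gb: float, bandwidth_gb_per_s: float = 2000.0) -> float:
    """Lower bound on the time to read data_gb once at the given bandwidth.

    Assumes peak bandwidth (2 TB/s = 2000 GB/s) is fully sustained.
    """
    return data_gb / bandwidth_gb_per_s


# One full pass over all 80 GB of on-board memory.
full_memory_pass = min_stream_time_seconds(80)
print(f"One pass over 80 GB: {full_memory_pass * 1000:.0f} ms")
```

Under these idealized assumptions, a full pass over the card's 80 GB takes about 40 ms, which gives a sense of why bandwidth-bound simulations benefit from this class of memory.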

The A100 offers immediate insights for sectors like finance, cybersecurity, and aerospace that depend on real-time data processing, facilitating quicker decision-making and better risk assessments.

With third-generation Tensor Cores, the A100 speeds up everything from neural networks to large-scale simulations, enabling researchers to tackle challenging computational problems more quickly than before.

Reasons for Companies to Purchase the NVIDIA A100

Investing in AI infrastructure can be difficult for tech companies, startups, and research labs. However, the A100’s capacity to optimize workloads, reduce energy consumption, and maximize utilization means companies can get more out of each GPU, lowering the hardware investment needed to remain competitive.

The A100 guarantees you have the processing power to innovate at scale, whether you’re in e-commerce with recommendation systems, finance with fraud detection models, or healthcare with AI-driven diagnostics.

Because the A100 adapts dynamically to shifting workloads, businesses can future-proof their AI investments and keep up with rapid advances in automation, big data analytics, and machine learning.

The Future is Being Defined by the AI Powerhouse

The NVIDIA A100 GPU is a revolution in high-performance computing and artificial intelligence, not just an improvement. It is rapidly emerging as the industry standard for AI innovation thanks to its state-of-the-art Ampere architecture, industry-leading memory bandwidth, and cloud-ready integration.

The A100 is enabling companies, researchers, and developers to address problems that were previously computationally impossible, from financial modeling to autonomous systems.

Frequently Asked Questions (FAQ) About NVIDIA A100 Chips

1. What makes the NVIDIA A100 different from other GPUs?

The A100 is specifically engineered for AI, deep learning, and scientific computing. It offers up to 20x higher performance than the previous generation on select workloads, supports Multi-Instance GPU (MIG) technology, and delivers about 2 TB/s of memory bandwidth.

2. What industries benefit the most from NVIDIA A100 chips?

Industries such as finance, healthcare, cloud computing, scientific research, cybersecurity, and e-commerce rely on the A100 for advanced data processing, AI model training, and real-time analytics.

3. Can the NVIDIA A100 be used for gaming?

The A100 is designed for enterprise-level AI and deep learning. While it is incredibly powerful, it is not optimized for gaming, as it focuses on machine learning workloads rather than graphical rendering.

4. What is the memory capacity of the NVIDIA A100?

The A100 comes with up to 80GB of HBM2e memory (a 40GB HBM2 model is also available). That capacity, combined with roughly 2 TB/s of bandwidth, makes it well suited to large-scale AI models and complex computations.

5. How does Multi-Instance GPU (MIG) improve efficiency?

MIG technology divides a single A100 into up to seven independent GPU instances, each with dedicated compute and memory. Multiple AI tasks can run simultaneously without interfering with one another, a major advantage for cloud computing and enterprise AI.

6. Where can I buy an NVIDIA A100 GPU?

The A100 is available through major cloud providers (AWS, Google Cloud, Azure) and NVIDIA-certified hardware partners for enterprise AI solutions.

7. What is the power consumption of the NVIDIA A100?

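The A100 has a maximum power rating of about 400W in its SXM form factor, while the PCIe cards are rated lower (250W to 300W). Relative to the throughput it delivers, this makes it an efficient choice for large-scale AI training and data center workloads.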

8. How does the A100 contribute to cloud computing?

The A100 integrates seamlessly with cloud services, enabling businesses to scale AI workloads dynamically, reducing on-premises hardware requirements while increasing flexibility.

9. What is the price of an NVIDIA A100 GPU?

The A100 typically costs between $10,000 and $15,000 per unit, depending on the vendor and system configuration.

10. Is the A100 suitable for startups and smaller enterprises?

Yes! With cloud-based access to A100 GPUs, startups can leverage enterprise-level AI power without the need for massive upfront hardware investments.

🔗 For more details, visit: NVIDIA Official Website
